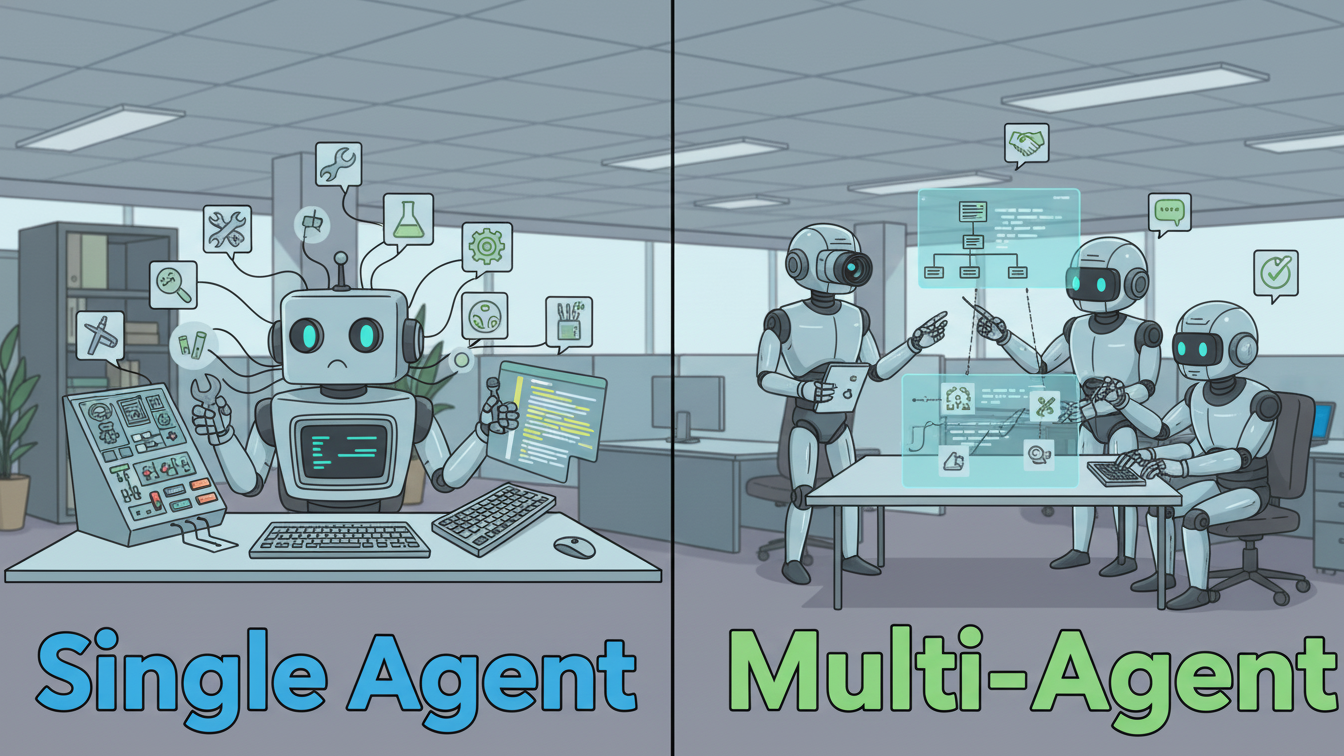

As artificial intelligence continues to evolve, two architectural approaches dominate many of today’s systems: single-agent and multi-agent AI. Understanding the differences between these approaches helps engineers, researchers, and business leaders choose the right design for automation, decision-making, and complex real-world tasks.

This article breaks down what these two system types are, how they work, where they excel, and when you should (or shouldn’t) use them.

1. What Is a Single-Agent System?

A single-agent system is an AI setup where one autonomous agent interacts with an environment to achieve a goal. The agent senses input, processes information, decides what to do, and takes actions—all by itself.
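The sense, decide, act cycle described above can be sketched as a simple reflex agent in a toy "vacuum corridor". This is a minimal Python sketch under assumed conditions: the `Corridor` environment, the `decide` policy, and the action names are hypothetical illustrations, not part of any particular library.

```python
from dataclasses import dataclass


@dataclass
class Corridor:
    """Hypothetical toy environment: a row of cells, each dirty (True) or clean (False)."""
    dirty: list[bool]
    position: int = 0

    def percept(self) -> tuple[int, bool]:
        # The agent observes only its own location and whether that cell is dirty.
        return self.position, self.dirty[self.position]

    def apply(self, action: str) -> None:
        # Update the world according to the chosen action.
        if action == "suck":
            self.dirty[self.position] = False
        elif action == "right":
            self.position = min(self.position + 1, len(self.dirty) - 1)


def decide(position: int, is_dirty: bool, last_cell: int) -> str:
    """Simple reflex policy: clean if dirty, otherwise move right, stop at the last cell."""
    if is_dirty:
        return "suck"
    if position < last_cell:
        return "right"
    return "stop"


def run_agent(env: Corridor, max_steps: int = 100) -> list[str]:
    actions = []
    for _ in range(max_steps):
        position, is_dirty = env.percept()                       # sense
        action = decide(position, is_dirty, len(env.dirty) - 1)  # decide
        if action == "stop":
            break
        env.apply(action)                                        # act
        actions.append(action)
    return actions


env = Corridor(dirty=[True, False, True])
print(run_agent(env))  # ['suck', 'right', 'right', 'suck']
print(env.dirty)       # [False, False, False]
```

All of the decision logic lives in one function, which is a large part of why single-agent designs are easy to test and debug.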

Key Characteristics

- A single decision-making entity: the entire system’s intelligence is centralized in one agent.

- Clear objective: it usually optimizes one goal or a small set of goals (e.g., maximize reward).

- Predictable environment assumptions: it is often designed for environments that are stable, fully observable, or controlled.

- Less complexity: fewer moving parts mean simpler algorithms, easier debugging, and lower computational costs.

Examples

- A chess engine such as AlphaZero, which chooses moves as one agent and models its opponent inside its own search.

- A robot vacuum mapping and cleaning a home.

- A recommendation engine providing suggestions to a user.

- A single chatbot designed to handle one conversation at a time.

Strengths

- Easy to build, train, and maintain.

- Good for well-structured problems.

- Standard techniques such as reinforcement learning, planning, and supervised learning apply directly.
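To make the reinforcement learning point concrete, here is a minimal tabular Q-learning sketch in which one agent learns to walk along a five-cell line to a goal. The environment, the reward of 1.0 at the goal, and the hyperparameters (`ALPHA`, `GAMMA`, `EPSILON`, 500 episodes) are assumptions chosen for illustration.

```python
import random

N_STATES = 5          # states 0..4 on a line; state 4 is the goal (assumed setup)
ACTIONS = (-1, +1)    # move left or move right
ALPHA, GAMMA, EPSILON = 0.5, 0.9, 0.2


def step(state: int, action: int) -> tuple[int, float, bool]:
    """Deterministic environment: reward 1.0 only when the goal is reached."""
    next_state = max(0, min(N_STATES - 1, state + action))
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def greedy(q: dict, state: int, rng: random.Random) -> int:
    # Break ties randomly so the untrained agent still tries both directions.
    best = max(q[(state, a)] for a in ACTIONS)
    return rng.choice([a for a in ACTIONS if q[(state, a)] == best])


def train(episodes: int = 500, seed: int = 0) -> dict:
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done, steps = 0, False, 0
        while not done and steps < 100:
            # Epsilon-greedy action selection.
            if rng.random() < EPSILON:
                action = rng.choice(ACTIONS)
            else:
                action = greedy(q, state, rng)
            next_state, reward, done = step(state, action)
            # Q-learning update: bootstrap from the best next action unless the episode ended.
            target = reward if done else reward + GAMMA * max(q[(next_state, a)] for a in ACTIONS)
            q[(state, action)] += ALPHA * (target - q[(state, action)])
            state, steps = next_state, steps + 1
    return q


q = train()
policy = [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)]
print(policy)  # expected: [1, 1, 1, 1] (always move right toward the goal)
```

Because there is only one learner and the environment does not change underneath it, the value estimates settle toward fixed targets; when several agents learn at once, each one's environment keeps shifting, which is one reason multi-agent training is harder.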

Limitations

- Struggles with large, dynamic, or complex environments.

- Cannot collaborate or coordinate with other autonomous entities.

- Becomes a bottleneck when it must handle many tasks or domains.

2. What Is a Multi-Agent System (MAS)?

A multi-agent system includes multiple autonomous agents interacting with each other and with their environment. Each agent may have its own knowledge, goals, and capabilities.

These agents may cooperate, compete, or even act independently while pursuing their own objectives.

Key Characteristics

- Decentralized decision-making: intelligence is spread across agents instead of living in one central model.

- Communication and coordination: agents can share information, negotiate, or plan together.

- Emergent behavior: complex outcomes arise from simple agent interactions.

- Scalability: more agents can be added to tackle bigger environments.
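Negotiation between agents can take many forms. One classic pattern is the Contract Net Protocol, in which a manager announces a task, agents submit bids, and the task is awarded to the best bidder. The sketch below is a simplified, single-round version of that idea, assuming hypothetical drone agents whose bid is their Manhattan distance to the task; it is not a full implementation of the protocol.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Agent:
    """Hypothetical drone agent with a grid position and an availability flag."""
    name: str
    position: tuple[int, int]
    busy: bool = False

    def bid(self, task_location: tuple[int, int]) -> Optional[int]:
        """Return a cost estimate (Manhattan distance), or None to decline the task."""
        if self.busy:
            return None
        dx = abs(self.position[0] - task_location[0])
        dy = abs(self.position[1] - task_location[1])
        return dx + dy


def allocate(tasks: dict[str, tuple[int, int]], agents: list[Agent]) -> dict[str, str]:
    """One simplified contract-net round per task: announce, collect bids, award to the lowest cost."""
    awards: dict[str, str] = {}
    for task_name, location in tasks.items():
        # Announce the task and gather bids from every agent.
        bids = [(agent.bid(location), agent) for agent in agents]
        valid = [(cost, agent) for cost, agent in bids if cost is not None]
        if not valid:
            continue  # nobody is available; the task stays unassigned
        # Award the task to the cheapest bidder and mark it as committed.
        _, winner = min(valid, key=lambda pair: pair[0])
        winner.busy = True
        awards[task_name] = winner.name
    return awards


agents = [Agent("drone_a", (0, 0)), Agent("drone_b", (5, 5))]
tasks = {"survey_north": (1, 0), "deliver_east": (4, 6)}
print(allocate(tasks, agents))  # {'survey_north': 'drone_a', 'deliver_east': 'drone_b'}
```

No agent sees the whole plan; the allocation emerges from each agent answering a local question ("how costly is this task for me?").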

Examples

- Swarm robotics (drones coordinating to map terrain or deliver goods).

- Traffic-management systems with many smart vehicles.

- Multiplayer game AI agents interacting dynamically.

- Modern AI orchestration frameworks (e.g., multi-agent LLM systems where different AI models handle different tasks).
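Swarm coordination of the kind listed above can be illustrated with average consensus: each drone repeatedly replaces its value with the average of its own and its neighbors' values, and the whole swarm converges on a shared rendezvous point even though no drone sees the full picture. This sketch assumes one-dimensional positions and a fixed ring of four drones; real swarms also deal with noisy links, motion, and changing neighbors.

```python
def consensus_step(values: list[float], neighbors: dict[int, list[int]]) -> list[float]:
    """Each agent replaces its value with the average of itself and its neighbors."""
    return [
        (values[i] + sum(values[j] for j in neighbors[i])) / (1 + len(neighbors[i]))
        for i in range(len(values))
    ]


# Assumed setup: four drones on a line, each able to talk only to its two ring neighbors.
positions = [0.0, 4.0, 8.0, 12.0]
ring = {0: [1, 3], 1: [0, 2], 2: [1, 3], 3: [2, 0]}

for _ in range(20):
    positions = consensus_step(positions, ring)

print([round(p, 3) for p in positions])  # [6.0, 6.0, 6.0, 6.0]
```

Because every drone in the ring has the same number of neighbors, each update preserves the sum of positions, so the swarm agrees on the mean of the starting positions (6.0 here) using only local exchanges.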

Strengths

- Handles complex, dynamic, and large-scale environments.

- Enables specialization (each agent can focus on one role).

- Better fault tolerance: if one agent fails, others can compensate, provided more than one agent can perform the failed agent's role.

- Supports collaborative intelligence (e.g., problem-solving teams of AIs).
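Fault tolerance in a multi-agent system usually comes from redundancy: if more than one agent can perform a task, a coordinator can retry elsewhere when one fails. Here is a minimal failover sketch, assuming hypothetical agents represented as plain functions and a made-up `AgentFailure` exception.

```python
from typing import Callable


class AgentFailure(Exception):
    """Hypothetical error raised by an agent that cannot complete its task."""


def run_with_failover(task: str, agents: list[tuple[str, Callable[[str], str]]]) -> tuple[str, str]:
    """Try each capable agent in turn; return (agent_name, result) from the first that succeeds."""
    errors = []
    for name, handle in agents:
        try:
            return name, handle(task)
        except AgentFailure as exc:
            # Record the failure and fall through to the next redundant agent.
            errors.append(f"{name}: {exc}")
    raise RuntimeError("all agents failed: " + "; ".join(errors))


def broken_agent(task: str) -> str:
    raise AgentFailure("sensor offline")


def backup_agent(task: str) -> str:
    return f"completed {task}"


print(run_with_failover("map sector 7", [("scout_1", broken_agent), ("scout_2", backup_agent)]))
# ('scout_2', 'completed map sector 7')
```

If every agent able to do the task fails, the error still surfaces; redundancy only helps when it actually exists.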

Limitations

- Much more complex to design and coordinate.

- Requires communication protocols and negotiation strategies.

- Can lead to unintended emergent behaviors (both good and bad).

- Harder to ensure stability or predict outcomes.

3. Key Differences at a Glance

| Aspect | Single-Agent | Multi-Agent |
|---|---|---|
| Number of decision-makers | One | Many |
| Complexity | Lower | Higher |
| Scalability | Limited | High |
| Coordination needed | None | Often required |
| Behavior | More predictable | Emergent, sometimes unpredictable |
| Suitable environments | Static, simple | Dynamic, distributed, or large-scale |
| Examples | Chatbot, vacuum robot | Drone swarm, distributed AI, autonomous fleets |

4. Choosing Between Single-Agent and Multi-Agent Systems

The choice depends heavily on the problem structure.

Use a Single-Agent System When:

- The task is simple or self-contained.

- A single perspective provides enough information.

- Predictability and control are more important than adaptability.

- You want lower computational and design overhead.

Examples: a solitaire game AI, a personal assistant, a forecasting model.

Use a Multi-Agent System When:

- The environment is large, dynamic, or distributed.

- Tasks require collaboration, negotiation, or competition.

- You want specialized agents tackling different parts of a problem.

- You need scalability and resilience.

Examples: smart city systems, distributed robotics, multi-LLM orchestration.

5. How These Concepts Apply to Modern AI (Especially LLMs)

With the rise of large language models (LLMs) and autonomous agents, multi-agent systems have become a major trend. Instead of building one giant monolithic AI, developers often create multiple specialized agents, each handling tasks like planning, coding, research, or verification.

This approach mirrors how human teams work: specialization combined with coordination tends to produce better outcomes.

However, single-agent LLMs are still extremely powerful and are often sufficient for linear tasks, personal assistance, or domain-specific problem solving.

Modern examples:

- Single-agent: a GPT-based personal assistant answering a question.

- Multi-agent: A team of AIs where one plans, one searches the web, one writes code, and one tests it.

6. The Future: Hybrid Architectures

Many real-world systems already blend both approaches, and this is likely to become more common:

- A single core AI (like a large LLM)

- Multiple surrounding tools or sub-agents for specialized tasks

This hybrid model provides:

- The simplicity of a single central agent

- The power and scalability of multi-agent cooperation
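A hybrid design can be sketched as one core agent that answers simple requests itself and delegates specialized ones to registered sub-agents. In this hypothetical sketch, `CoreAgent`, `math_agent`, and `search_agent` are illustrative names, and a keyword match stands in for the routing decision that an LLM with tool calling would normally make.

```python
from typing import Callable


def math_agent(request: str) -> str:
    # Hypothetical specialist: sums the integers found in the request.
    numbers = [int(tok) for tok in request.split() if tok.lstrip("-").isdigit()]
    return f"sum = {sum(numbers)}"


def search_agent(request: str) -> str:
    # Hypothetical specialist: a real one would query a search service.
    return f"search results for '{request}'"


class CoreAgent:
    """Single central agent that handles requests itself or delegates to registered sub-agents."""

    def __init__(self) -> None:
        self.specialists: dict[str, Callable[[str], str]] = {}

    def register(self, keyword: str, agent: Callable[[str], str]) -> None:
        self.specialists[keyword] = agent

    def handle(self, request: str) -> str:
        # A keyword match stands in for the routing decision an LLM would make.
        for keyword, agent in self.specialists.items():
            if keyword in request.lower():
                return agent(request)
        return f"core agent answered directly: {request}"


core = CoreAgent()
core.register("sum", math_agent)
core.register("search", search_agent)
print(core.handle("sum 2 and 40"))          # sum = 42
print(core.handle("search recent papers"))  # search results for 'search recent papers'
print(core.handle("hello"))                 # core agent answered directly: hello
```

The core agent stays the single point of control, while new capabilities are added by registering sub-agents rather than retraining or rewriting the core.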

Expect increasing use of:

- AI swarms

- Agent hierarchies

- Multi-model collaboration

- Distributed AI decision-making

Conclusion

Single-agent AI systems are simple, predictable, and ideal for focused tasks.

Multi-agent AI systems shine in complex, dynamic, and large-scale environments where coordination or specialization is valuable.

The future of AI is not “one or the other” but a blend. As systems grow more capable, we will rely on a combination of centralized intelligence and distributed agents to achieve powerful, efficient, and human-like collaboration across tasks.
